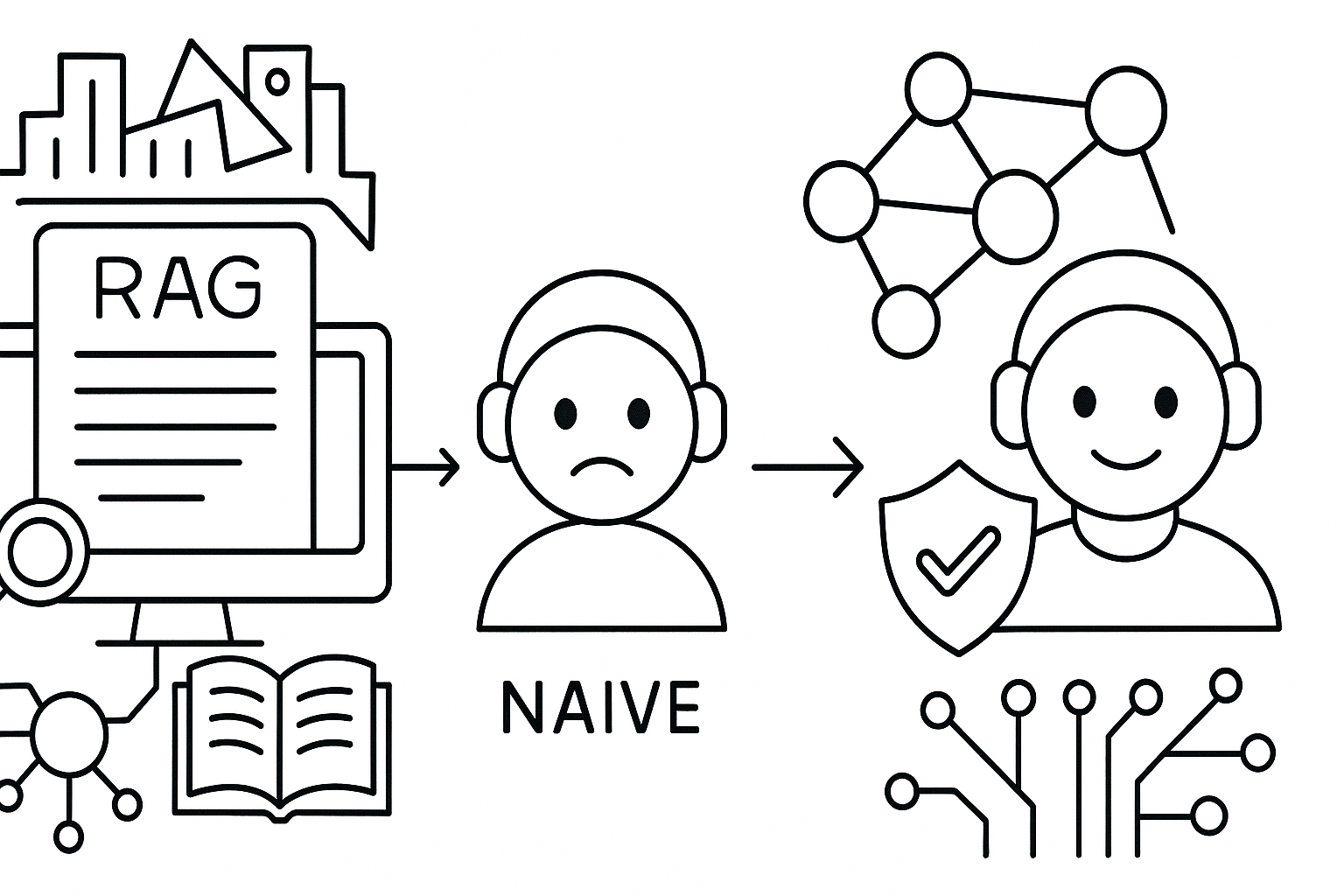

We’re all familiar with Retrieval-Augmented Generation (RAG), the tech that lets chatbots answer questions using our internal knowledge base. But as data volume and complexity grow, classic “naive” RAG starts to fail. It mixes up contexts, gives conflicting answers, and hallucinates, because it cannot see the connections hidden between documents.

The problem isn’t the language model; it’s how we feed it information. The solution is a paradigm shift: moving from isolated text chunks to structured knowledge graphs that map the real-world relationships between the entities in your data.

In our new article, we take a deep technical dive into the next generation of RAG. We break down:

- Why classic vector search is a dead end for complex, interconnected questions.

- How graph-based approaches work, using Microsoft’s powerful GraphRAG as a prime example.

- How the new LightRAG framework achieves comparable — and sometimes better — answer quality while being hundreds of times cheaper to operate.

If you want to build an AI assistant whose answers are not just eloquent but also verifiable and logically sound, this deep dive is for you.
